Today, I am back with a very useful blogging term that every blogger must know: Robots.txt.

In Blogger it is known as Custom Robots.txt, which means you can now customize this file to suit your needs. In today’s tutorial, we will discuss this term in depth and learn about its uses and benefits. I will also show you how to add a custom robots.txt file in Blogger.

So let’s start the tutorial.

What is Robots.txt?

Robots.txt is a text file that contains a few lines of simple code. It is saved on the website’s or blog’s server and tells web crawlers which parts of your blog they may crawl. That means you can keep crawlers away from any page on your blog, such as your label pages, your demo page, or any other page that is not important enough to appear in search engines. Always remember that search crawlers read the robots.txt file before crawling any web page.

Each blog hosted on Blogger has a default robots.txt file that looks something like this:

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://example.blogspot.com/feeds/posts/default?orderby=UPDATED

Explanation

This code is divided into three sections. Let’s study each of them first, and after that we will learn how to add a custom robots.txt file to Blogspot blogs.

- User-agent: Mediapartners-Google

- User-agent: *

- Sitemap: http://example.blogspot.com/feeds/posts/default?orderby=UPDATED

The first section, User-agent: Mediapartners-Google, is for the Google AdSense robots and helps them serve better ads on your blog. Whether or not you use Google AdSense on your blog, simply leave it as it is.

The second section, User-agent: *, applies to all robots, which is what the asterisk (*) stands for. With the default settings, our blog’s label links are blocked for search crawlers. That means web crawlers will not crawl our label page links because of the line below.

Disallow: /search

That means links whose path begins with /search right after the domain name will be ignored. See the example below, which is the link to a label page named SEO.

http://www.bloggertipstricks.com/search/label/SEO

And if we remove Disallow: /search from the above code, crawlers will be able to access our entire blog and crawl all of its content and web pages.

Here Allow: / matches every URL on the blog, starting from the homepage. That means web crawlers can crawl the homepage and every other page that is not blocked by a more specific Disallow rule.
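To see how these default rules behave, here is a minimal sketch using Python’s standard urllib.robotparser module. The blog address is the placeholder from the example above, and the helper name allowed is only for illustration. It checks a label page and a normal post for a general crawler, and a label page for the AdSense robot.

```python
from urllib.robotparser import RobotFileParser

# The default Blogger rules from above. Each User-agent line sits directly
# above its own rules, and a blank line ends a group.
DEFAULT_RULES = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /
"""

BLOG = "http://example.blogspot.com"  # placeholder blog address (assumption)

parser = RobotFileParser()
parser.parse(DEFAULT_RULES.splitlines())


def allowed(agent, path):
    """Return True if `agent` may crawl `path` under DEFAULT_RULES."""
    return parser.can_fetch(agent, BLOG + path)


print(allowed("Googlebot", "/search/label/SEO"))             # False: label page
print(allowed("Googlebot", "/2013/03/post-url.html"))        # True: normal post
print(allowed("Mediapartners-Google", "/search/label/SEO"))  # True: empty Disallow
```

Python’s parser applies the first rule that matches, while some search engines pick the most specific matching rule. For this file both approaches should give the same answers.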

Disallow Particular Post

Now suppose we want to keep search crawlers away from a particular post. Then we can add the line below to the code.

Disallow: /yyyy/mm/post-url.html

Here yyyy and mm refer to the publishing year and month of the post respectively. For example, if we published a post in March 2013, then we have to use the format below.

Disallow: /2013/03/post-url.html

To make this task easy, you can simply copy the post URL and remove the blog address from the beginning.

Disallow Particular Page

If we need to disallow a particular page, we can use the same method as above. Simply copy the page URL and remove the blog address from it, which will look something like this:

Disallow: /p/page-url.html
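The "copy the URL and remove the blog address" step can also be done with a few lines of Python. This is only a sketch: the function name disallow_line is made up for this tutorial, and the URLs are the placeholders used above.

```python
from urllib.parse import urlparse


def disallow_line(url):
    """Turn a full post or page URL into a robots.txt Disallow rule."""
    path = urlparse(url).path
    # Without "http://" the blog address ends up in the path, so reject it.
    if not path.startswith("/"):
        raise ValueError("expected a full URL starting with http:// or https://")
    return "Disallow: " + path


print(disallow_line("http://example.blogspot.com/2013/03/post-url.html"))
# Disallow: /2013/03/post-url.html
print(disallow_line("http://example.blogspot.com/p/page-url.html"))
# Disallow: /p/page-url.html
```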

The third section, the Sitemap line, points to the sitemap of our blog. By adding the sitemap link here, we are simply improving how well our blog gets crawled. Whenever web crawlers read our robots.txt file, they will find a path to our sitemap, where the links to all of our published posts are listed. Web crawlers will find it easy to crawl all of our posts. Hence, there is a better chance that web crawlers will crawl all of our blog posts without missing a single one.

Note: This sitemap will only tell web crawlers about the 25 most recent posts. If you want to increase the number of links in your sitemap, replace the default sitemap with the one below. It will work for the 500 most recent posts.

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

If you have more than 500 published posts on your blog, then you can use two sitemaps like below:

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=501&max-results=500
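If your blog keeps growing, the Sitemap lines can be generated instead of typed by hand. The sketch below assumes that start-index counts posts from 1 and that each sitemap page holds 500 posts, so each page starts right after the previous one ends. The function name sitemap_lines is only for illustration.

```python
def sitemap_lines(blog_url, total_posts, page_size=500):
    """Build one Sitemap line per block of `page_size` posts."""
    base = blog_url.rstrip("/")
    lines = []
    # Pages start at post 1, 501, 1001, ... (assumes 1-based start-index).
    for start in range(1, total_posts + 1, page_size):
        lines.append(
            f"Sitemap: {base}/atom.xml?redirect=false"
            f"&start-index={start}&max-results={page_size}"
        )
    return lines


for line in sitemap_lines("http://example.blogspot.com", total_posts=1200):
    print(line)
```

For a blog with 1200 posts this prints three lines, starting at posts 1, 501 and 1001.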

Adding Custom Robots.txt to Blogger

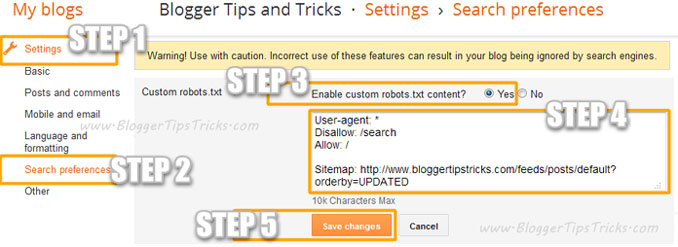

Now for the main part of this tutorial: how to add a custom robots.txt in Blogger. Below are the steps to add it.

- Go to your Blogger blog.

- Navigate to Settings ›› Search Preferences ›› Crawlers and indexing ›› Custom robots.txt ›› Edit ›› Yes

- Now paste your robots.txt file code in the box.

- Click the Save Changes button.

- You are done!
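If you are not sure what to paste into the box, the sketch below puts the pieces from this tutorial together into one text. It is only an illustration: the function name build_robots_txt is hypothetical, and the paths and sitemap URL are the placeholders used earlier.

```python
def build_robots_txt(disallow_paths, sitemap_urls):
    """Assemble robots.txt text from the default Blogger rules plus extras."""
    lines = [
        "User-agent: Mediapartners-Google",
        "Disallow:",
        "",
        "User-agent: *",
        "Disallow: /search",
    ]
    # Extra posts or pages to block go in the same group as /search.
    lines += [f"Disallow: {path}" for path in disallow_paths]
    lines += ["Allow: /", ""]
    lines += [f"Sitemap: {url}" for url in sitemap_urls]
    return "\n".join(lines) + "\n"


print(build_robots_txt(
    ["/p/page-url.html"],
    ["http://example.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500"],
))
```

Copy the printed text and paste it into the custom robots.txt box.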

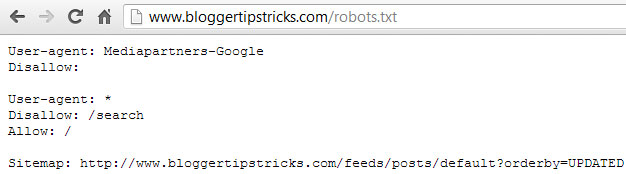

How to Check Your Robots.txt File?

You can check this file on your blog by adding /robots.txt to the end of your blog URL in the browser. Take a look at the example below.

http://www.bloggertipstricks.com/robots.txt

Once you visit the robots.txt file URL, you will see the entire code that you are using in your custom robots.txt file. See the image below.
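The same check can be done from a script instead of the browser. This is a minimal sketch using only Python’s standard library. The function names are made up for this tutorial, and fetch_robots_txt needs an internet connection, so it is defined here but not called.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def robots_txt_url(blog_url):
    """Return the robots.txt address for a blog URL."""
    # A leading slash makes urljoin replace any existing path.
    return urljoin(blog_url, "/robots.txt")


def fetch_robots_txt(blog_url, timeout=10):
    """Download the robots.txt text of a blog (requires network access)."""
    with urlopen(robots_txt_url(blog_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


print(robots_txt_url("http://www.bloggertipstricks.com"))
# http://www.bloggertipstricks.com/robots.txt
```

Calling fetch_robots_txt with your own blog URL should return the same text you see in the browser.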
